By IAN FAILES

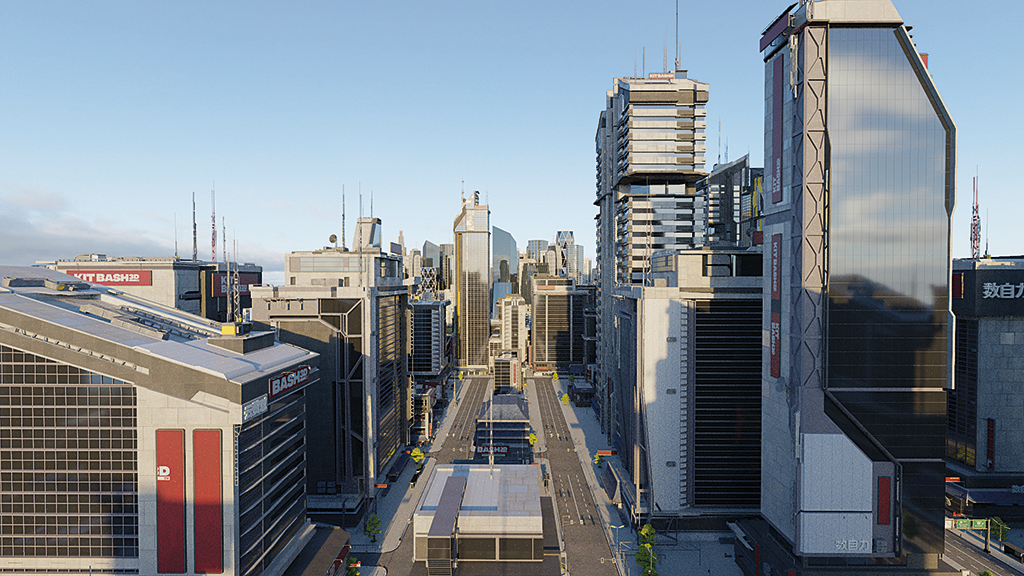

The art and technology of visual effects always seem to be changing, and one technology has brought about rapid change for the industry. That technology is ‘real-time’. For the most part, this means real-time rendering, particularly via game engines and GPUs. Real-time rendering now drives a great deal of virtual production, for everything from previs, set scouting, live motion capture, simulcam work and on-set compositing to in-camera visual effects and even final-frame rendering. Among its many benefits are instant or faster feedback for cast and crew, and the shift of more key decisions into pre-production and the shoot instead of post.

Landmark projects from the past year that incorporated real-time include The Lion King and The Mandalorian, but the technology is permeating a wide range of large and small VFX productions. Looking to the future, VFX Voice asked a number of companies invested in real-time how their tools and techniques are likely to impact the industry.

Epic Games, the maker of Unreal Engine, is one of the companies at the forefront of real-time. A recent Epic Games demo showed real-time technologies coming together to enable in-camera VFX during a live-action shoot. It featured an actor on a motorbike filmed against a series of LED wall panels. The imagery on the walls blended seamlessly with the live-action set and could also be altered in real-time.

“An LED wall shoot has the potential to impact many aspects of filmmaking,” suggests Ryan Mayeda, Technical Product Manager at Epic Games. “It can enable in-camera finals, improve greenscreen elements with environment lighting when in-camera finals may not be feasible, improve the ability to modify lighting both on actors and within the 3D scene live on stage, to modify the 3D scene itself live on stage, for actors to see the environment that they’re acting against in real-time, to change sets or locations quickly, to re-shoot scenes at any time of day with the exact same setup, review and scout scenes simultaneously in VR, use gameplay and simulation elements live on stage and much more.”

“Filmmakers love it because they can get more predictability into exactly what a given scene or project will demand,” says Mayeda, on the reaction to the demo. “Art directors get very excited about the virtual location scouting features and the ability to collaborate in real-time with the director and DP. DPs have been blown away by the range of lighting control virtual production affords and the speed at which they can operate the system. VFX teams see the possibility of unprecedented collaboration and more simultaneous, non-linear workflows.”

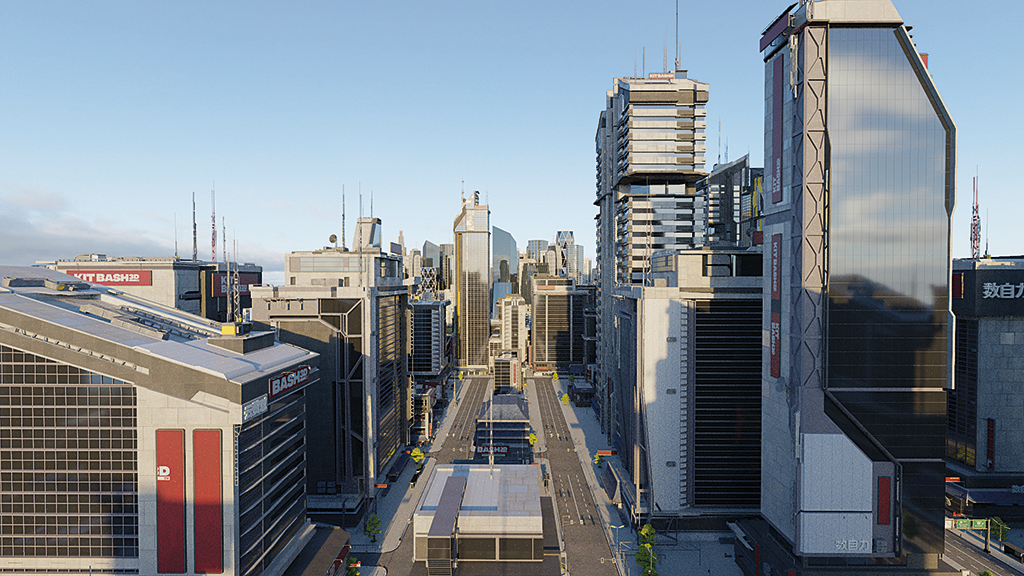

Unity Technologies, with its Unity game engine, is another of the big players in real-time rendering and virtual production. Like Unreal Engine, Unity is seeing increasing use inside studio production pipelines, whether those studios are making games, experiences or VFX shots. One major area of development for Unity has been its Scriptable Render Pipeline, part of a push to make content authoring workflows more accessible for developers.

Unity’s Scriptable Render Pipeline allows developers to adjust and optimize how rendering is done inside the engine. A AAA game like Naughty Dog’s Uncharted series, which targets higher-end hardware such as PCs and consoles at 30 frames per second, faces constraints quite different from those of a mobile VR game that might need to run at 90 fps on a mid-range phone.
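One quick way to see why those two targets pull a renderer in different directions is to compare their per-frame time budgets. The Python sketch below simply divides one second by the frame rate; it is illustrative arithmetic only and says nothing about how Unity itself schedules rendering work.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds, at a target frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# The two targets mentioned above: a 30 fps console/PC title and a 90 fps mobile VR title.
for label, fps in [("AAA console/PC title", 30), ("mobile VR title", 90)]:
    print(f"{label}: {fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
```

At 30 fps a frame has roughly 33.3 ms to finish; at 90 fps it has roughly 11.1 ms, a third of the time, on much weaker hardware. That gap is the kind of difference a configurable pipeline is meant to accommodate.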

“By using the Scriptable Render Pipeline, developers are given a choice of what algorithms to use,” outlines Natalya Tatarchuk, Vice President of Graphics at Unity Technologies. “They can take advantage of our two pre-built solutions, use those pre-built pipelines as a template to build their own, or create a completely customized render pipeline from scratch.

“This level of fine-grained control means that you can choose the solution best suited to the needs of your project, factoring in things like graphic requirements, the platforms you plan to distribute on, and more.”
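The three choices Tatarchuk describes (use a pre-built pipeline, adapt one as a template, or write one from scratch) can be illustrated with a minimal sketch that treats a pipeline as an ordered list of render passes. Unity’s actual SRP API is written in C# and looks different; every class and pass name below is hypothetical, and the passes only record their names rather than drawing anything.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Frame:
    scene: str
    log: List[str] = field(default_factory=list)  # names of the passes that ran


RenderPass = Callable[[Frame], None]


def make_pass(name: str) -> RenderPass:
    """Placeholder pass that only records its name; a real pass would draw."""
    def run(frame: Frame) -> None:
        frame.log.append(name)
    return run


class RenderPipeline:
    """Hypothetical base class: a pipeline is an ordered list of render passes."""

    def passes(self) -> List[RenderPass]:
        raise NotImplementedError

    def render(self, scene: str) -> Frame:
        frame = Frame(scene)
        for render_pass in self.passes():
            render_pass(frame)
        return frame


# Option 1: pre-built pipelines, here two hypothetical stand-ins.
class LightweightPipeline(RenderPipeline):
    def passes(self) -> List[RenderPass]:
        return [make_pass("forward_opaque"), make_pass("transparent")]


class HighFidelityPipeline(RenderPipeline):
    def passes(self) -> List[RenderPass]:
        return [make_pass("gbuffer"), make_pass("deferred_lighting"),
                make_pass("volumetrics"), make_pass("transparent")]


# Option 2: start from a pre-built pipeline as a template and adjust it.
class MobileVRPipeline(LightweightPipeline):
    def passes(self) -> List[RenderPass]:
        return [make_pass("vr_stereo_setup")] + super().passes()


# Option 3: a pipeline written from scratch.
class StylizedPipeline(RenderPipeline):
    def passes(self) -> List[RenderPass]:
        return [make_pass("toon_shading"), make_pass("outline")]


for pipeline in (LightweightPipeline(), HighFidelityPipeline(),
                 MobileVRPipeline(), StylizedPipeline()):
    print(type(pipeline).__name__, pipeline.render("demo").log)
```

Subclassing a pre-built pipeline keeps its passes and lets a project insert or swap only what it needs, while the from-scratch option shares nothing but the base interface.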

Advancements in real-time rendering have not been lost on companies that traditionally built offline renderers. Chaos Group, the maker of V-Ray, for example, is developing its own real-time ray tracing application, dubbed Project Lavina, which lets users explore V-Ray scenes in a fully ray-traced environment in real-time.

“We’ve built this from the ground up to be a real-time ray tracer, so that the speed of it is always going to be the thing that’s paramount,” outlines Lon Grohs, Global Head of Creative, Chaos Group. “The second part of that is that NVIDIA’s new cards and hardware really make a big difference in terms of that speed.

“So, imagine if you’re working with a 3D application and your scene is just ray-traced all the time,” adds Grohs. “There’s no toggling back and forth between all your frames, and as you put on a shader or a material or a light that is what it is, and it’s giving you instant feedback.”
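Grohs does not describe Lavina’s internals. A common way interactive ray tracers deliver ‘always ray-traced’ feedback, though, is progressive accumulation: each frame adds a noisy sample per pixel to a running average, and any scene edit resets the average so the image refines again from the new state. The sketch below assumes that generic pattern; the class, the `scene_version` counter and the Gaussian noise model standing in for real ray tracing are all hypothetical.

```python
import random
from typing import List, Optional


class ProgressiveAccumulator:
    """Running per-pixel average of noisy samples, restarted when the scene changes.

    Generic progressive-rendering pattern; not Project Lavina's implementation.
    """

    def __init__(self, num_pixels: int) -> None:
        self.sums = [0.0] * num_pixels
        self.count = 0
        self.scene_version: Optional[int] = None

    def add_frame(self, samples: List[float], scene_version: int) -> List[float]:
        # Any edit (a new shader, material or light) bumps the version, so
        # samples from the old scene are discarded and accumulation restarts.
        if scene_version != self.scene_version:
            self.sums = [0.0] * len(self.sums)
            self.count = 0
            self.scene_version = scene_version
        self.count += 1
        for i, sample in enumerate(samples):
            self.sums[i] += sample
        # The displayed image is the mean of all samples since the last change.
        return [total / self.count for total in self.sums]


def noisy_trace(true_radiance: List[float], rng: random.Random) -> List[float]:
    """Hypothetical stand-in for one ray-traced sample per pixel: truth plus noise."""
    return [value + rng.gauss(0.0, 0.2) for value in true_radiance]


rng = random.Random(0)
scene = [0.5, 0.8, 0.1]
acc = ProgressiveAccumulator(len(scene))
for _ in range(64):
    image = acc.add_frame(noisy_trace(scene, rng), scene_version=1)
# Noise in the running mean shrinks roughly as 1/sqrt(frames), so the image converges.

scene = [0.9, 0.8, 0.1]  # the artist brightens a light
image = acc.add_frame(noisy_trace(scene, rng), scene_version=2)
print(acc.count)  # 1: accumulation restarted for the edited scene
```

The trade-off is that the first frame after an edit is the noisiest, which is why raw ray-tracing speed, and the GPU hardware Grohs mentions, matters so much for how ‘instant’ the feedback feels.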

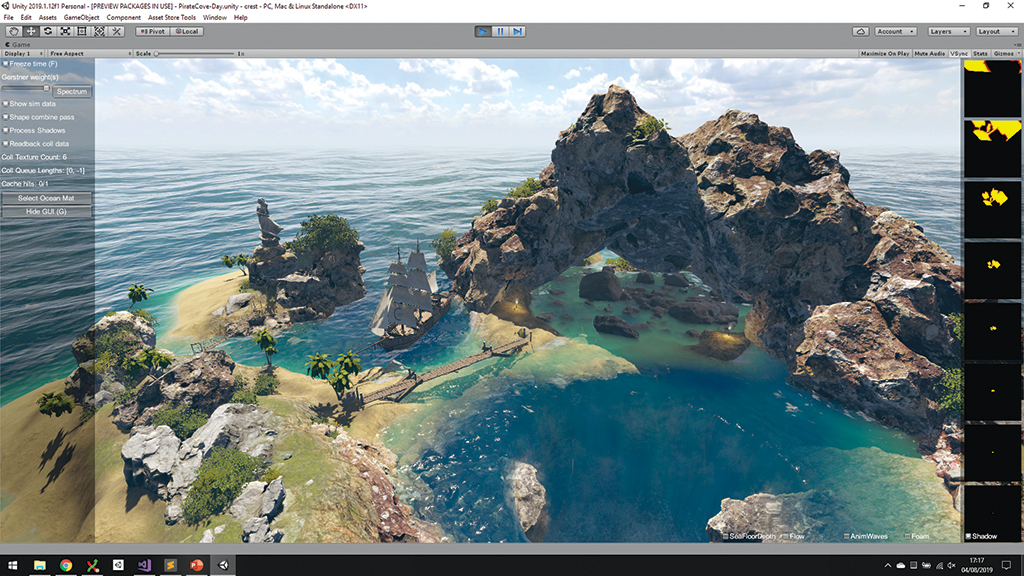

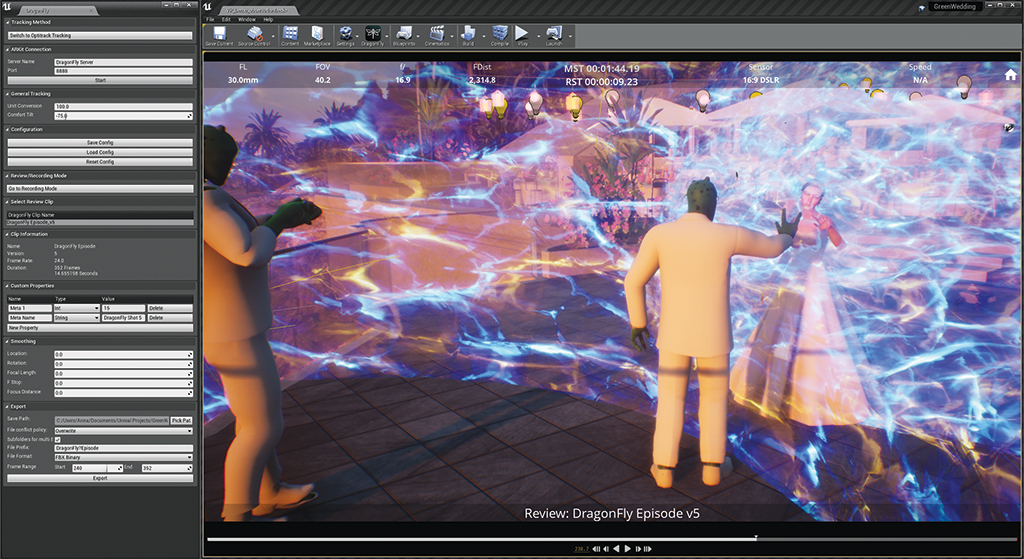

Films known for their adoption of virtual production, such as Avatar, Ready Player One and The Lion King, largely relied on purpose-built real-time tools and workflows engineered to enable, for instance, set scouting, simulcam shooting and VR collaboration. Glassbox is among a host of companies now offering more of an ‘out of the box’ virtual production solution for filmmakers.

Its tools, DragonFly and BeeHive, work with major content creation software such as Unreal, Unity and Maya. They are aimed at letting users visualize virtual performances on a virtual set, for example by watching a mythical CG creature fly through a fantasy land in real-time, and at enabling collaboration with a virtual art department on the virtual sets.

“Although techniques like virtual production have existed before,” acknowledges Glassbox Technologies CPO and Co-founder Mariana Acuña Acosta, “studios have not been able to effectively replicate the workflow of a live-action production in VFX, games and VR development without huge investments in bespoke tech and manpower.”

“DragonFly and BeeHive change that,” says Acuña Acosta. “We have created an ecosystem of platform-agnostic tools based on the latest VR and game engine technologies that offer studios of all sizes and all budgets a truly disruptive set of tools capable of transforming filmmaking and content production.”

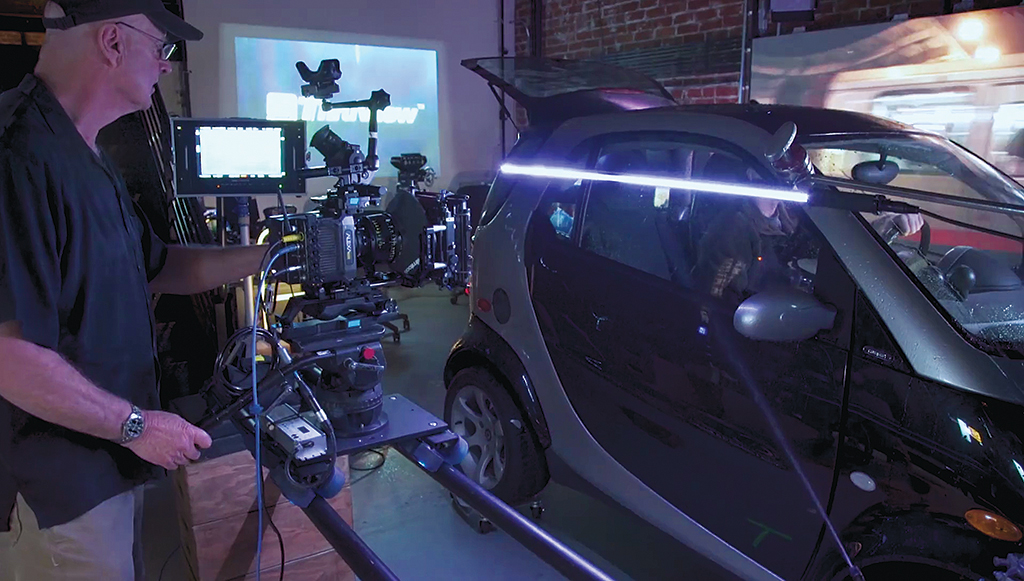

Perhaps one of the ultimate examples of real-time being embraced by visual effects practitioners is Stargate Studios CEO Sam Nicholson’s ThruView system. This set of tools allows a scene to be filmed in live action while real-time-rendered CG, pre-rendered CG or pre-composited live-action elements are displayed on set in real-time and captured in-camera.

“For certain applications, like driving, flying, planes, trains and automobiles or set extensions, it is an ideal tool,” argues Nicholson. “What it solves particularly, as opposed to greenscreen, is shallow depth of field, shiny objects, and real-time feedback in your composite image. Rather than trying to make decisions two weeks downstream or someone else making that decision for you, you have all the tools you need on set in one place to create the image that you want to create in real-time.”

For a recent show featuring a train sequence, Nicholson’s team shot real moving-train footage with 10 cameras. The resulting 14 hours of plates (200 terabytes’ worth) were then ingested, color-corrected and readied for an on-set train carriage shoot. “We’re playing back 8K on set, using 10 servers serving 40 4K screens, one for each window on a 150-foot-long train set,” explains Nicholson, who notes that the real-time setup also allows live lighting changes, for example when the train goes through a tunnel.
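The figures Nicholson quotes can be sanity-checked with a little arithmetic. The sketch below assumes ‘4K’ means 3840×2160 UHD, ‘8K’ means 7680×4320 and terabytes are decimal; it also reads ‘14 hours of plates’ simply as the footage duration the 200 TB covers, since the article does not say how that total is split across the 10 cameras.

```python
# Figures quoted in the article.
SCREENS, SERVERS = 40, 10
PLATE_TB, PLATE_HOURS = 200, 14

# Assumptions: "4K" = 3840x2160 UHD, "8K" = 7680x4320, decimal terabytes.
UHD_4K = 3840 * 2160
UHD_8K = 7680 * 4320

screens_per_server = SCREENS // SERVERS
pixels_per_server = screens_per_server * UHD_4K

# Average data volume per hour and per second of plate footage.
tb_per_hour = PLATE_TB / PLATE_HOURS
gb_per_second = PLATE_TB * 10**12 / (PLATE_HOURS * 3600) / 10**9

print(f"{screens_per_server} screens per server, {pixels_per_server:,} pixels per server")
print(f"one 8K frame: {UHD_8K:,} pixels")
print(f"{tb_per_hour:.1f} TB per hour of plates, about {gb_per_second:.2f} GB/s")
```

Four UHD screens and one 8K frame both come to 33,177,600 pixels, so each of the 10 servers drives an 8K-sized canvas. That is consistent with the ‘8K playback’ description, though the article does not spell out how the servers are actually configured.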

“ThruView gives you all of the horsepower and the creative tools that you have in a traditional greenscreen composite, but now in real-time,” continues Nicholson. “We’re going after finished pixels, in camera, on set, with the ability to pull a key and do post-production work on it if you want to, but to get you at least 80% of the way there and not have to back up and start over again.”

Real-time technologies are enabling animators and visual effects artists to capture and create characters faster and at higher fidelity than ever before, sometimes by having artists or actors drive a character directly with their own performances. Reallusion’s iClone, for example, offers this through its Motion Live plugin.

The plugin aggregates motion-data streams from an array of industry motion-capture devices, such as those from Xsens, Noitom, Rokoko, OptiTrack, Qualisys and ManusVR. John C. Martin II, Vice President of Marketing at Reallusion, describes how this works. “Choose a character in iClone, use Motion Live to assign a face mocap device, like the iPhone, which uses our LiveFace iPhone app to transfer the data, a body mocap device or a hand mocap device. All are optional or can be used at the same time for full-body real-time performance capture.”
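Martin’s description (face, body and hand sources, each optional, combined into one live performance) can be pictured as a merge of the latest sample from each device channel into a single character pose. This is a minimal sketch under that assumption; the classes, channel names and the ‘keep the newest sample’ rule are hypothetical and do not reflect Reallusion’s actual API or data protocol.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Sample:
    timestamp: float          # seconds; device clocks assumed already synchronized
    values: Dict[str, float]  # e.g. joint rotations or blendshape weights


@dataclass
class CharacterRig:
    # One optional source per channel, mirroring the face, body and hand devices.
    latest: Dict[str, Optional[Sample]] = field(
        default_factory=lambda: {"face": None, "body": None, "hands": None})

    def receive(self, channel: str, sample: Sample) -> None:
        if channel not in self.latest:
            raise KeyError(f"unknown channel: {channel}")
        current = self.latest[channel]
        # Keep only the newest sample; late, out-of-order packets are ignored.
        if current is None or sample.timestamp >= current.timestamp:
            self.latest[channel] = sample

    def pose(self) -> Dict[str, float]:
        """Merge whichever channels are active into one pose for this tick."""
        merged: Dict[str, float] = {}
        for channel, sample in self.latest.items():
            if sample is not None:
                for name, value in sample.values.items():
                    merged[f"{channel}.{name}"] = value
        return merged


rig = CharacterRig()
rig.receive("face", Sample(0.016, {"jaw_open": 0.4}))     # e.g. a phone face stream
rig.receive("body", Sample(0.016, {"spine_rot_x": 12.0}))  # e.g. an inertial suit
rig.receive("face", Sample(0.010, {"jaw_open": 0.9}))     # older packet, ignored
print(rig.pose())  # {'face.jaw_open': 0.4, 'body.spine_rot_x': 12.0}
```

Because an inactive channel simply contributes nothing, the same rig works with a face device alone or with face, body and hands together.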

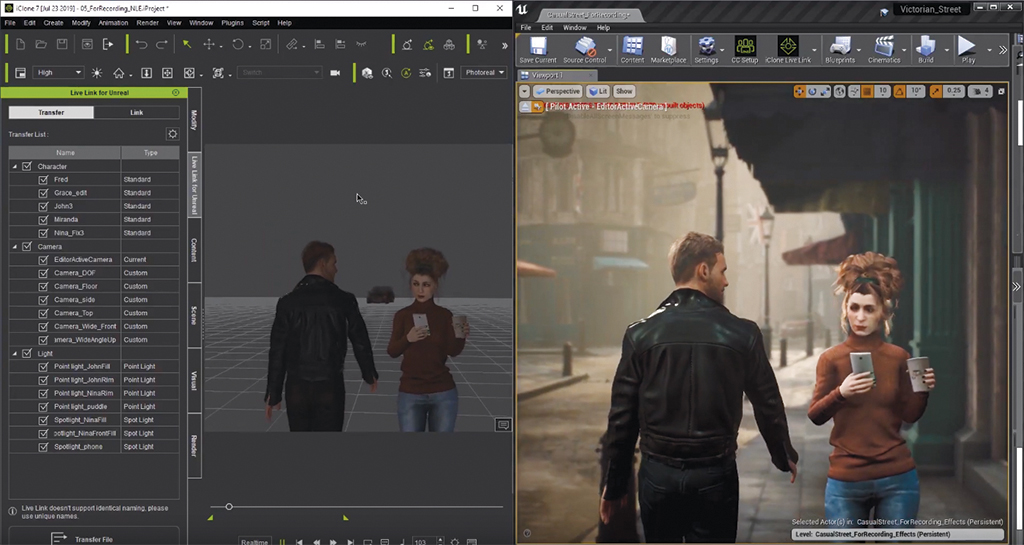

This forms just part of iClone’s larger system for character creation and animation, which was also recently connected to Unreal Engine through the iClone Unreal Live Link plugin. “The process begins with our Character Creator 3 content to generate a custom morph and designed character ready for animation,” states Martin. “Animate the characters with iClone and transfer characters, animation, cameras and lights directly to Unreal Engine. FBX can still be imported as always, but Live Link adds so much more to what you can do with the content, especially in real-time.”

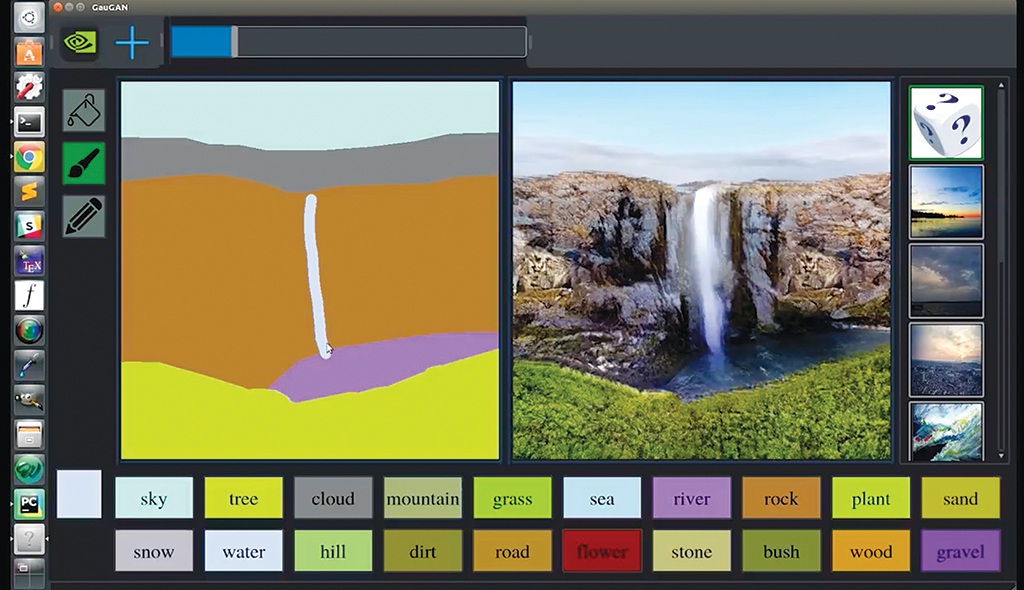

The possibilities of real-time are sometimes still in the research phase. NVIDIA’s GauGAN, winner of the SIGGRAPH 2019 Real-Time Live! event, is one such technology. It allows a user to paint a rough version of a scene and then instantly see it transformed, via a neural network, into a compelling landscape or scene. NVIDIA already supplies many of the graphics cards that power real-time rendering, and it has a significant hand in machine learning research.

“GauGAN tries to imitate human imagination capability,” advises Ming-Yu Liu, Principal Research Scientist at NVIDIA. “It takes a segmentation mask, a semantic description of the scene, as input and outputs a photorealistic image. GauGAN is trained with a large dataset of landscape images and their segmentation masks. Through weeks of training, GauGAN eventually captures correlation between segmentation masks and real images.”
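The ‘segmentation mask’ Liu describes is a per-pixel label map: every pixel the user paints carries a class such as sky or water. A common way to present such a map to a neural network, and the form used in NVIDIA’s published SPADE research that underpins GauGAN, is one binary plane per class (one-hot encoding). The sketch below shows only that input encoding, not the network; the three-class palette is hypothetical.

```python
from typing import Dict, List


def one_hot_mask(labels: List[List[int]], num_classes: int) -> List[List[List[int]]]:
    """Turn an H x W map of class ids into num_classes binary H x W planes."""
    height, width = len(labels), len(labels[0])
    planes = [[[0] * width for _ in range(height)] for _ in range(num_classes)]
    for y, row in enumerate(labels):
        for x, class_id in enumerate(row):
            if not 0 <= class_id < num_classes:
                raise ValueError(f"class id {class_id} out of range at ({y}, {x})")
            planes[class_id][y][x] = 1  # exactly one plane is 'on' per pixel
    return planes


# Hypothetical palette a user might paint with.
CLASSES: Dict[str, int] = {"sky": 0, "mountain": 1, "water": 2}

painted = [
    [0, 0, 0, 0],
    [1, 1, 0, 0],
    [2, 2, 2, 2],
]
planes = one_hot_mask(painted, num_classes=len(CLASSES))
print(planes[CLASSES["water"]])  # [[0, 0, 0, 0], [0, 0, 0, 0], [1, 1, 1, 1]]
```

Encoding classes as separate planes avoids implying that ‘water’ (2) is numerically ‘more’ than ‘sky’ (0), which a single integer channel would suggest to the network.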

For now, GauGAN is demo technology, which users have been able to try at NVIDIA events and online. It could perhaps help generate production scenes quickly and accurately from minimal input, although its ‘training’ relied on around a million landscape images released on the internet under Creative Commons licenses.

While photoreal digital humans make waves in feature films and television shows, real-time CG humans are beginning to have a similar impact. Digital Domain has drawn on its own experience with CG humans, and on its internal Digital Human Group, to jump into real-time humans.

“We were shocked by how well our real-time engine, Unreal, handled our feature-quality assets,” says Doug Roble, Senior Director of Software R&D at Digital Domain, who performed as his own live avatar at TED in 2019. “To generate the look of the real-time versions we used the same resolution models, textures, displacements and hair grooms from our high-resolution actor scans. We did do some optimization but focused on ensuring we kept the look.”

In the TED Talk, Roble took the stage in an Xsens suit, Manus gloves and a helmet-mounted camera, all of which fed into IKinema to solve a ‘DigiDoug’ model running in Unreal, while his avatar was projected in real-time on a screen behind him. Machine learning techniques added detail to the facial expressions Roble was making.

“All of this data from face shapes to finger motion to facial blood flow all needs to be calculated, transmitted and re-rendered in 1/6th of a second,” notes Roble. “It is truly mind-boggling that it is all actually possible.”