By IAN FAILES

Previs studios have always had to work fast. They are regularly called upon to fashion animatics quickly and make numerous iterations to suit changing scripts and last-minute inclusions. Often this also involves delivery of technical schematics – techvis – for how a shot could be filmed. They also tend to get involved after the shoot to do postvis, where quick delivery is again essential.

While previs studios have had to be nimble, new real-time rendering tools have now enabled them to be even more so. Plus, using the same tools coupled with virtual production techniques allows previs studios to deliver even more in the shot planning and actual shooting process.

For example, previs and virtual production on the Disney+ series The Mandalorian adopted real-time workflows in a whole new way. The production took a ‘volume’ approach to shooting, in which actors were filmed against real-time rendered content on LED walls. That content went through several stages of planning: traditional previs, a virtual art department, and then preparation for real-time rendering on the LED walls using Epic Games’ Unreal Engine. The studios involved in this work included ILM, The Third Floor, Halon Entertainment, Happy Mushroom and Mold3D Studio.

The Mandalorian is just one of the standout projects where previs, virtual production and real-time rendering are making significant inroads. Here’s how several previs studios, including those that worked on the show, have adapted to these new workflows.

Like many previs studios, Halon Entertainment relied for many years almost solely on Maya and its native viewport rendering for previs. However, the studio did dabble in real-time via MotionBuilder for mocap, ILM’s proprietary previs tool ZVIZ on the film Red Tails, and Valve’s Source Filmmaker.

When Epic Games’ Unreal Engine 4 was released, Halon jumped on board real-time in a much bigger way. “We saw the potential in the stunning real-time render quality, which our founder Dan Gregoire mandated be implemented across all visualization projects,” relays Halon Senior Visualization Supervisor Ryan McCoy. “War for the Planet of the Apes was our first previs effort with the game engine, and our tools and experience have grown considerably since. It has been our standard workflow for all of our visualization shows for a few years now.”

Modeling and keyframe animation for previs still happens in Maya and is then exported to Unreal. Here, virtual rigs to light the scene can be manipulated, or VR editing tools used to place set dressing. “We also have virtual production capabilities where multiple actors can be motion-captured and shot through a virtual camera displaying the Unreal rendered scene,” adds McCoy. “This can work through an iPad, or a VR headset, allowing the director to run the actors through a scene and improvise different actions and camera moves, just as they would on a real set.

“Aside from the virtual production benefits, these real-time techniques also have some significant ramifications for our final-pixel projects,” continues McCoy. “Being able to re-light a scene on the fly with ray-traced calculations allows us to tweak the final image without waiting hours for renders. Look development for the shaders and FX simulations are also all done in real-time.”

One of Halon’s real-time previs projects was Ford v Ferrari, where the studio produced car race animatics that had a stylized look achieved with cel-shaded-like renders – the aim was to cut easily with storyboards. “We utilized Unreal’s particle systems and post processes to make everything fit the desired style while still timing the visuals to be as realistic as possible,” outlines Halon Lead Visualization Artist Kristin Turnipseed. “That combined with a meticulously built Le Mans track and being faithful to the actual race and cornering speeds allowed us to put together a dynamic sequence that felt authentic, and allowed us to provide detailed techvis to production afterward.”

The Third Floor has also adopted real-time in a major way, for several reasons, according to CTO and co-founder Eric Carney. “First, the technology can render high-quality visuals in real-time and works really well with real-world input devices such as mocap and cameras. We think a hybrid Maya/Unreal approach offers a lot of great advantages to the work we do and are making it a big part of our workflow. It allows us to more easily provide tools like virtual camera (Vcam), VR scouting and AR on-set apps. It lets us iterate through versions of our shots even faster than before.”

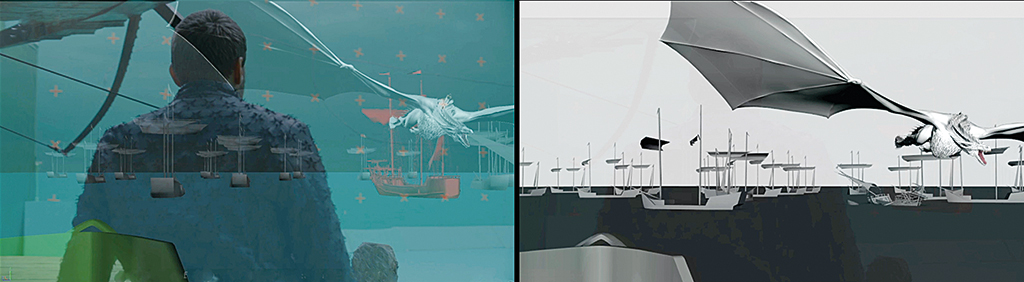

The Third Floor has recently leveraged real-time in visualization work on For All Mankind, as well as location-based attractions. A significant project in which the company combined several real-time and virtual production approaches was the final season of Game of Thrones. Here, a simul-cam setup for a Drogon ship attack scene, and VR set scouting for King’s Landing, helped the show’s makers and visual effects teams plan and then complete complex shots that involved a mix of live action and CG.

“For the Euron vs. Drogon oner,” says Carney, “we deployed a range of techniques led by Virtual Production/Motion Control Supervisor Kaya Jabar. We used the TechnoDolly as our camera crane and to provide simul-cam. We pre-programmed the camera move into the Technocrane based on the previs, which The Third Floor’s team headed by Visualization Supervisor Michelle Blok had completed. The TechnoDolly provided us with a live camera trans/rotation that we fed into MotionBuilder to generate the CG background. We also set up an LED eyeline so the actor knew where the dragon was at all times.”

The virtual set scouting approach for King’s Landing included imagining a large street scene. “The street set was going to be a massive, complicated build, and everyone wanted to make sure it would work for all the scenes that were going to be filmed on it,” states Carney. “Our artists were embedded in the art department and were able to convert their models into Unreal for VR scouting. The directors used the VR to pre-scout the virtual set and check for size and camera angles.”

Then, the studio’s VR scouting setup was adopted by production to craft an animatic for the show’s Throne Room sequence, where Drogon burns the throne and retrieves Daenerys. The Third Floor artists animated blocking, while the DP used the camera viewfinder tool in the studio’s VR system to ‘film’ the shots. Says Carney: “We visualized Drogon’s animation across the scene in VR and then moved the shots into regular previs and then finally transferred Drogon’s performance to an on-set Ncam system, allowing the directors and crew to see the ‘dragon in the room.’ Casey Schatz, Head of Virtual Production at The Third Floor, used the real-time composite together with floor marks derived from techvis to provide accurate cues for the actor and eyeline pole operator.”

Nviz (a rebrand of VFX studio Nvizible and previs studio Nvizage) began utilizing Unreal Engine 4 about three years ago, initially just as a rendering tool. Now, with projects in the works such as The Witches, Morbius and The King’s Man, the studio has become a major real-time user.

“From the beginning,” advises Nviz Head of Visualization Janek Lender, “we could see the huge potential of using the engine for previs and postvis, and it only took us a few weeks to decide we needed to invest time creating visualization tools to be able to take full advantage of this method of working.

“Right now,” notes Lender, “we are merging our previs and Vcam setups so that we can offer the shot creation back to the filmmakers themselves. We also have tools that allow us to drive characters within the engine from our motion capture suits, and this way we can have complete control over the action within the scene. In essence, this offering constitutes an extremely powerful visualization toolset, which can be used to virtually scout sets, to visit potential locations which could be used for backplates, to create shots by shooting the action live (using the camera body and lens sets chosen by the DP), and then to edit the shots together there and then to create the previsualized sequences of the film.

“Adopting a real-time technique has increased the speed of our workflow and offered far more richness in the final product, such as real-time lighting, real-time effects, faster shot creation, higher quality asset creation, improved sequence design, and many other improvements that we could not have achieved with our legacy workflow.”

During its history in visual effects production, DNEG has dived into previs and virtual production on many occasions. But now the studio has launched DNEG Virtual Production, a dedicated team made up of specialists in VFX, VR, IT, R&D, live-action shooting and game engines to work in this space. Productions for which DNEG has delivered virtual production services include Denis Villeneuve’s Dune and Kenneth Branagh’s Death on the Nile.

“Our current virtual production services offering includes immersive previsualization tools – virtual scouting in VR and a virtual camera solution – and on-set mixed reality tools for real-time VFX, including an iPad app,” outlines DNEG Head of Virtual Production Isaac Partouche. “Our immersive previs services enable filmmakers to scout, frame and block out their shots in VR using both the HTC VIVE Pro VR headset and our own virtual camera device.

“DNEG has been focused on developing a range of proprietary tools for its virtual production offering, creating a suite of tools developed specifically for filmmakers,” adds Partouche, who also notes that the studio’s real-time rendering toolset is based on Unreal Engine. “We have consulted with multiple directors and DPs during the development process, taking on board their feedback and ensuring that the tools are as intuitive as possible for our filmmaking clients.”

John Griffith is one of the leading proponents of game engine use in previs. He was the Previs Director at 20th Century Fox for six years. In 2009 he helped Crytek develop Cinebox, which was the first game engine developed for previs. Early in production on Dawn of the Planet of the Apes, Griffith oversaw a game-engine-rendered proof-of-concept previs teaser for the film, the first of its kind.

Griffith now runs previs outfit CNCPT, which has fully adopted game engines for previs, as well as VR scouting tools. Some of this work has also led to the creation of final shots. For example, when approached by Zoic Studios to craft layout and previs for a video game segment in the Seth Rogen-produced Future Man series, CNCPT found that it was in a good position to actually produce the final shots. “It was a video game… and we do all our work in a video game engine,” notes Griffith. “Fortunately, the producers at Zoic were really open to it.”

The motion of the gameplay was pre-generated motion capture or hand animation. Assets were animated in Maya and then brought into Unreal Engine. “We approached it as we would for any other previs sequence,” says Griffith. “We started with rough storyboards and we fashioned it to look and feel like a next-gen video game.

“It was a hyper-realized version of a video game and we could go as bloody as we wanted,” continues Griffith. “Characters explode and body parts fly and there is way more blood than normal. At one point the hero character gets his arms ripped off and beaten with them, and shot in the crotch. That was an idea that we snuck in there and Seth loved it! Since it was previs and final all rolled into one, we could present ideas exactly as they would be represented in the show, so it went very fast. I think we produced all of the elements from start to finish in about a month.”