By TREVOR HOGG

One of the leading artists incorporating AI into his creative process is Refik Anadol, who created Bosphorus, a sculpture inspired by high-frequency radar data collections. (Image courtesy of Refik Anadol)

Much has been made lately of the proliferation of artificial intelligence within the realm of art and filmmaking, whether it be AI entrepreneur Aaron Kemmer using OpenAI’s chatbot ChatGPT to generate a script, create a shot list and direct a film within a weekend, or Jason Allen combining Midjourney with AI Gigapixel to produce “Théâtre D’opéra Spatial,” which won the digital category at the Colorado State Fair. On the art platform ArtStation, protest images have appeared showing a red circle with a line through the letters AI and declaring ‘No to AI Generated Images,’ while U.K. publisher 3dtotal posted a statement on its website declaring, “3dtotal has four fundamental goals. One of them is to support and help the artistic community, so we cannot support AI art tools as we feel they hurt this community.”

“There are some ethical considerations mainly about who owns data,” notes Jacquelyn Ford Morie, Founder and Chief Scientist at All These Worlds LLC. “If you put it out on the web, is it up for grabs for scrubbers to come and grab those images for machine learning? Machine learning doesn’t work unless you have millions of images or examples. But we are out at an inflection point with the Internet where there are millions of things out there and we have never put walls around it. We have created this beast and only now are we getting pushback about, ‘I put it out to share but didn’t expect anyone would just grab it.’”

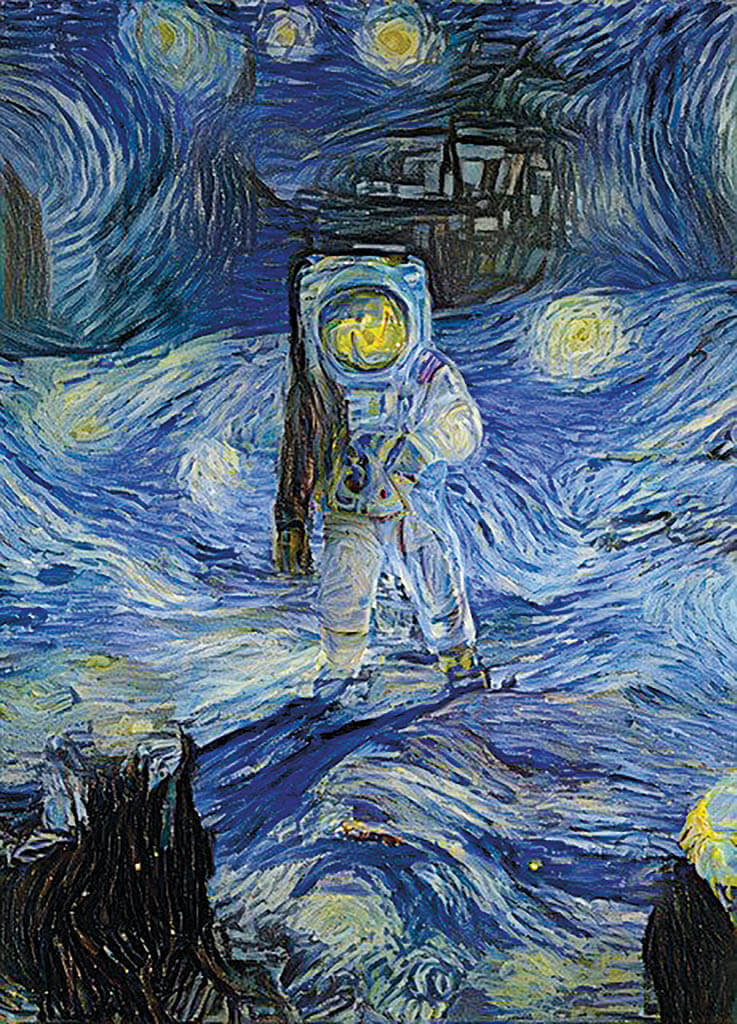

The iconic photo of Buzz Aldrin on the moon, taken by Neil Armstrong on the 1969 Apollo 11 mission, reimagined in the style of Van Gogh’s The Starry Night. This is one of the earliest artworks ever made with NightCafé Creator, and still one of the best. (Image courtesy of NightCafé Studio)

‘In the style of’ text prompts are making artists uneasy about their work being replicated by an algorithm, as in the case of Polish digital artist Greg Rutkowski, whose name is one of the most commonly used prompts for the open-source AI art generator Stable Diffusion. “I just feel like at this point it’s unstoppable, and the biggest issue with AI is the fact that artists don’t have control of whether or not their artwork gets used to train the AI diffusion model,” remarks Alex Nice, who was a concept illustrator on Black Adam and Obi-Wan Kenobi. “In order for the AI to create its imagery, it has to leverage other artists’ collective ‘energy’ [and] years of training and dedication to a craft. Without those real artists, AI models wouldn’t have anything to produce. I believe AI art will never get the artistic appreciation that real human-made art gets. This is the fundamental difference people need to understand. Artists create things, and hacks looking for a shortcut only know how to ‘generate content.’”

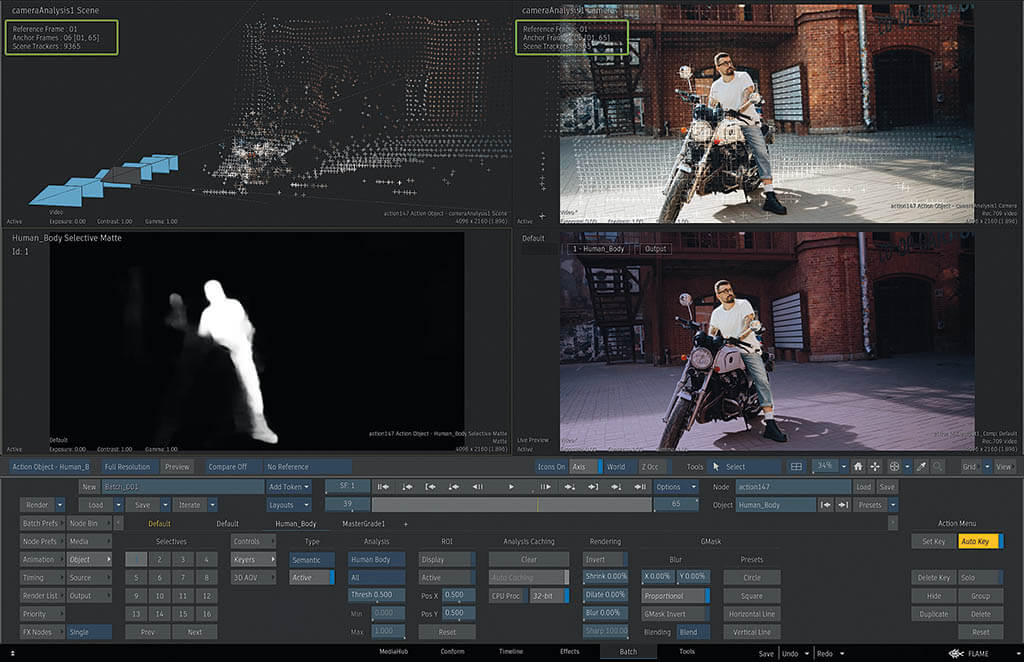

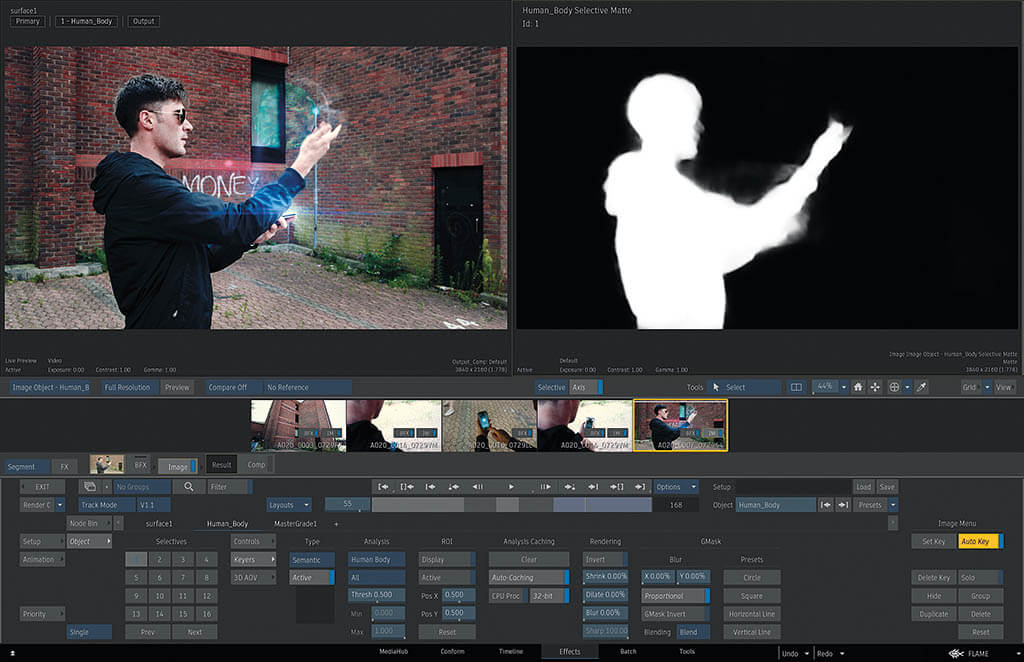

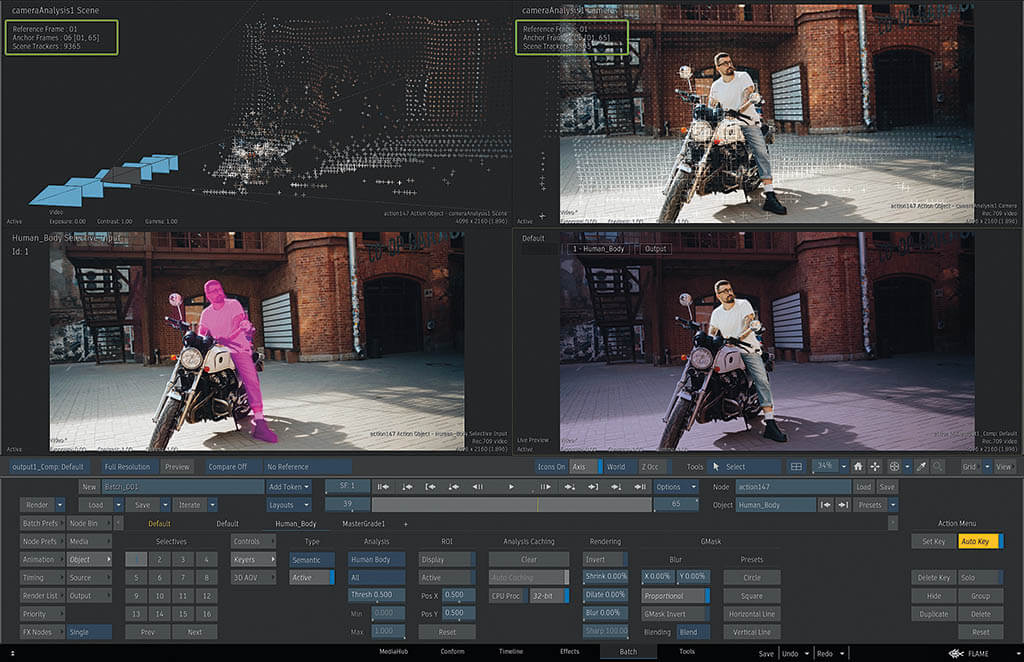

Rather than rely on the Internet, AI artists like Sougwen Chung are training robotic assistants on their own artwork and drawing alongside them, which follows in the footsteps of another collaboration that lasted 40 years and produced the first generation of computer-generated art. “There was some interesting stuff going on there that nobody knows about in the history of AI and art,” observes Morie. “Harold Cohen and the program AARON, which was an automatic program that could draw with a big plotter that learned as it drew and made these huge, beautiful drawings that were complex and figurative, not abstract at all.”

AI is also seen as an essential element for developing future tools for artists. “Flame’s new AI-based tools, in conjunction with other Flame tools, help artists achieve faster results within compositing and color-grading workflows,” remarks Steve McNeill, Director of Engineering at Autodesk. “Looking forward, we see the potential for a wider application of AI-driven tools to enable better quality and more workflow efficiencies.”

Trevor Hogg experimenting with DreamStudio by Stability AI to create a futuristic environment by using prompts such as steampunk city block, nighttime, dance club, wet snow falling, in the style of Syd Mead and Ralph McQuarrie. (Image courtesy of Trevor Hogg)

Machine learning is seen as an essential element by Autodesk for developing future tools for artists and has been incorporated into Flame. (Image courtesy of Autodesk)

The following prompts were entered into AI Image Generator API by DeepAI: An angel with black wings in a white dress holding her arms out, a digital rendering, by Todd Lockwood, figurative art, annabeth chase, at an angle, canva, global radiant light, dark angel, 3D. (Image courtesy of DeepAI)

The following prompts were entered into AI Image Generator API by DeepAI: A girl in a white dress and a blue helmet, a detailed painting, by wlop, fantasy art, paint tool sai!! blue, [mystic, nier, detailed face of an asian girl, blueish moonlight, chloe price, female with long black hair, artificial intelligence princess, anime, soft lighting, old internet art. (Image courtesy of DeepAI)

Does the same reasoning apply to the creation of text-to-image programs such as AI Image Generator API by DeepAI? “When we saw the research coming out of the deep learning community around 2016-2017, we knew practically every part of our lives would be changed sooner or later,” notes Kevin Baragona, Founder of DeepAI. “This was because we saw simultaneous AI progress in very disparate fields such as text processing, gameplaying, computer vision and AI art. The same basic technology [neural networks] was solving a whole bunch of terribly difficult problems all at once. We knew it was a true revolution! At the time, the best AI was locked away in research labs and the general public didn’t have access to it. We wanted to develop the technology to bring magic into our daily lives, and to do it ethically. We brought generative AI to the public in 2017. We knew that AI progress would be rapid, but we were shocked at how rapid it turned out to be, especially starting around 2020.” Baragona sees AI as having a positive rather than a negative impact on the artistic community. “We’ve seen that text-to-image generators are practically production-ready today for concept art. Every day, I’m excited by the quality of art that takes a couple seconds to produce. Visual effects will continue to get more creative, more detailed and much cheaper to produce. Basically, this means we’ll have vastly more visual effects and art, and the true artists will be able to create superhuman art with the aid of these computer tools. It’s a revolution on par with the rise of CGI in the 1990s.”

Undoubtedly, there are legal issues as to who owns the output and whether the original sources should be given credit. “As the core building blocks of new AI generative models continue to mature, a new set of questions will arise, like it has happened with many other transformative technologies in the past,” notes Cristóbal Valenzuela, Co-Founder and CEO at Runway. “Ownership of content created in Runway is owned by users and their teams. Models can also be retrained and customized for specific use cases. We are also building together a community of artists and creators that inform how we make product decisions to better serve those community needs and questions.” The AI revolution is not to be feared. “There are always questions that emerge with the rise of a new technology that challenges the status quo,” Valenzuela observes. “The benefits of unlocking creativity by using natural language as the main engine are vast. We will see so many new people able to express themselves through various multimodal systems, and we’ll see previously complicated arenas like 3D, video, audio and image be more accessible mediums. Tools are only as good as the artist who wields them, and having more artists is ultimately an incredible benefit to the industry.”

Should AI art be the final public result? “I think that the keyword prompts used should also be displayed along with it, and any prompt that directly references a notable piece of existing art or artist should require a licensing deal with that referenced artist,” remarks Joe Sill, Founder and Director at Impossible Objects. “For instance, if an AI art is displayed that has utilized the prompt of ‘a mountaintop with a dozen tiny red houses in the style of Gregory Crewdson,’ you’re likely going to need to have that artist’s involvement and sign-off in order for that art to be displayed as a final result. If AI art is simply used in a pipeline as a starting point to inspire an artist with references, I think it’d be great for the programs themselves to start being listed in credits. Apps like Midjourney or DALL·E being credited as the key programs that help artists develop early inspiration only helps with transparency and also accessibility. If an artist releases a piece of work that was influenced by AI art, they can credit the programs used like, ‘Software used: DALL·E, Adobe Photoshop, Sketchpad.’”

AI has given rise to a new technological skill in the form of “the person who can write a compelling prompt for a program like DALL·E 2 to extract a compelling image,” states David Bloom, Founder and Consultant at Words & Deeds Media. “To some extent it’s a different version of what artists have always faced. If you are a musician, you had to learn how to play an instrument to be able to reproduce the things that you were hearing in your head. I remember George Lucas saying in the 1990s when they put out a redone version of Star Wars, ‘I’m never going to show the original version again because the technology now allows me to create a film that matches what I saw in my head.’ It’s just like that. The technology is going to allow new kinds of people to see something that they have in their head and get it out without necessarily having the same or any technical skills, if they can articulate it.”

Part of the fear of AI comes from misunderstanding. “It’s not as powerful as people think it is,” notes Jim Tierney, Co-Founder and Chief Executive Anarchist at Digital Anarchy. “It’s certainly useful, but in the context of how we use AI, which is for speech-to-text, if you have well-recorded audio and someone who speaks well, it’s fantastic but falls off the cliff quickly as the audio degrades. There is a lot of fear around AI. We were supposed to have replicants by now! Blade Runner was set in 2019. Come on. Where are my flying cars?” As for the matter of licensing rights, Tierney references what creatively drives artists in the first place. “If you say, ‘Brisbane, California, at night like Vincent van Gogh would have done,’ that’s going to create something probably Starry Night-ish. But how is that different from me painting it using that visual reference and an art book? It’s complicated. I spent a bunch of time messing around with Midjourney. If you go in there looking for something specific and say, ‘I want X. Create this for me.’ You will have to go through many iterations. People can make some cool stuff with it, but it seems rather random.”

What often limits the quality of AI art is not the technology but the effort put into the prompt. “People have this idea that you type in three simple words and get some sort of masterpiece,” observes Cassandra Hood, Social Media Manager at NightCafé Studio. “That’s not the case. A lot of work goes into it. If you are planning on receiving a finished image that you are picturing in your mind, you’re going to have to put the work into finding the right words. There is a lot of experimenting that goes with it. It’s a lot harder than it seems. That applies to many people who think it’s not as advanced or not too good right now. It can be good if you put the work in and actually practice your prompts. We personally don’t create the algorithms, but give you a platform and an easy-to-use interface for beginners who move on to the bigger, more complicated code notebooks once they graduate from NightCafé. We are focusing on the community aspect of things and making sure to provide that safe environment for AI artists to hang out and talk about their art. We have done that on the site by adding comments and contests.”

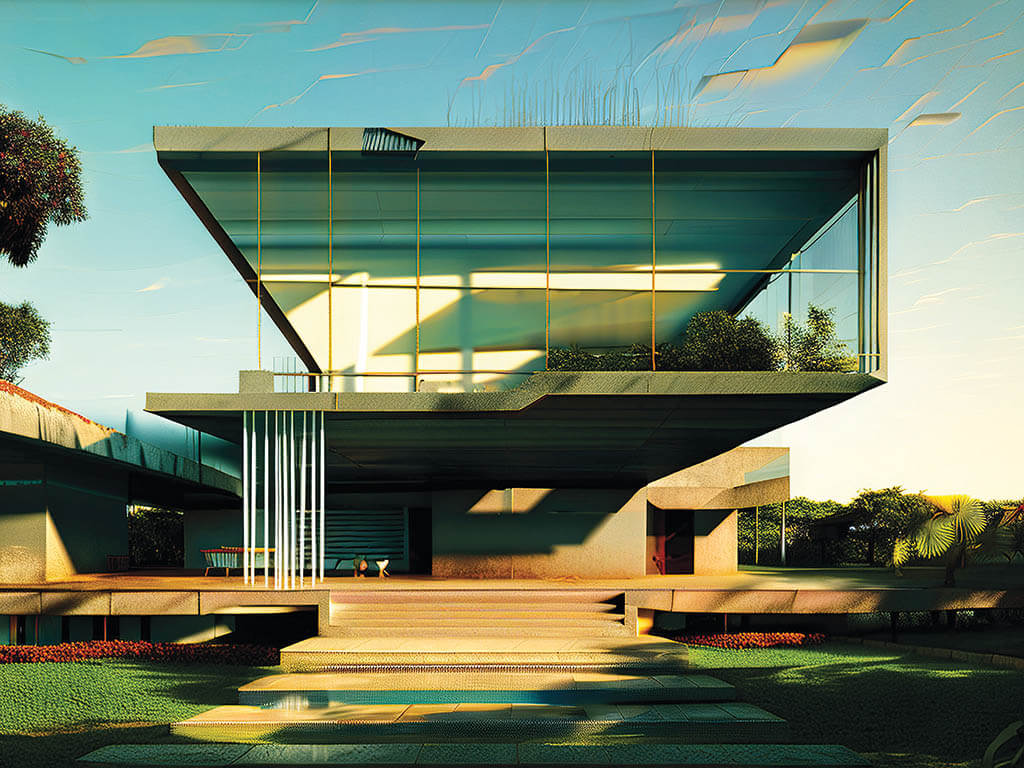

This particular Refik Anadol image was commissioned by the city of Fort Worth, Texas. (Image courtesy of Refik Anadol)

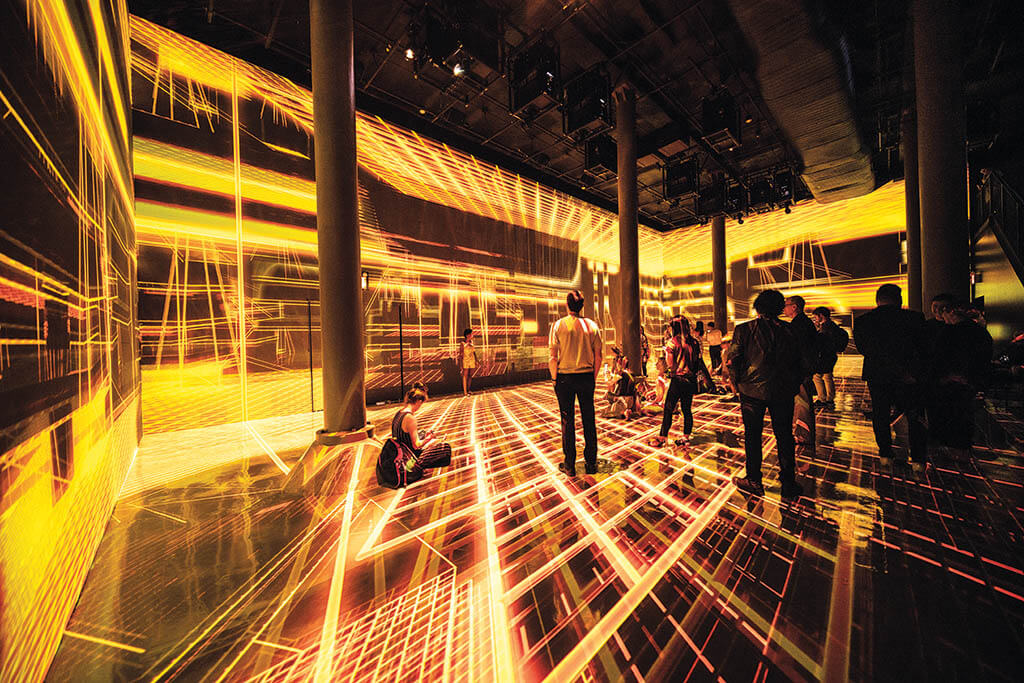

An interactive art exhibit created by Refik Anadol that utilizes AI. (Image courtesy of Refik Anadol)

Refik Anadol created projections to accompany the Los Angeles Philharmonic Orchestra performing Schumann’s Das Paradies und die Peri. (Image courtesy of Refik Anadol)

Example of completely AI-generated character concept art by Joe Sill of Impossible Objects, created through Midjourney’s new V4 update, which “makes people look like people.” (Images courtesy of Impossible Objects)

“My opinion on this AI revolution has changed,” acknowledges Philippe Gaulier, Art Director at Framestore. “It was probably a year or a year and a half ago that AI really exploded in the concept art world. At the beginning I thought, ‘Oh, my god, our profession is dead.’ But then I realized it’s not because I saw the limits of AI. There is one factor that we shouldn’t forget, which is the human contact. Clients will never stop wanting to deal with human beings to produce some work for whatever film or project that they have. However, there will be less of us to produce the same amount of work in the same way when tools in any industry evolve to become more efficient. The tools haven’t replaced people because people are still needed to supervise and run them because machines don’t communicate like we do. But there has been a reduction in the number of people for any given task. I have been in this industry long enough to understand that things evolve all of the time. I have already started playing around with AI for references. I’m not asking myself whether it’s good or bad. I’ve accepted the idea that it is going to be part of the creative process because human beings in general like shortcuts.”

In the middle of the AI revolution is Stable Diffusion, which was created by researchers at Ludwig Maximilian University of Munich and Runway ML, and supported by a compute grant from Stability AI. The release of the free, open-source neural network for generating photorealistic and artistic images from text prompts was such a resounding success that Stability AI was able to raise $101 million in funding for its open-source AI research, which involves other types of diffusion models for music, video and medical research. “A research team led by Robin Rombach and Patrick Esser was looking at ways to communicate with computers and did different experiments looking at text-to-image,” remarks Bill Cusick, Creative Director for DreamStudio, run by Stability AI. “Their goal was to get to a place where instead of being limited by your physical abilities you could be able to translate your ideas into images. It has evolved in a way where now we can see what is possible, and there is a bifurcation of what the approaches are. Stable Diffusion and DreamStudio are tools. DreamStudio gives you settings and parameters to control image generation. I had a meeting with an indie studio creating a workflow using Stable Diffusion, and it’s as complex as a Hollywood workflow would be, and the results are incredible. There are also people authoring Blender and Unreal Engine plug-ins, and I’ve reached out to as many community devs as possible to help accelerate their development, and I hope more folks get in touch.”

Stability AI Creative Director Bill Cusick experimenting with the possibilities of AI art. (Images courtesy of Stability AI)

Cusick adds, “AI is never going to whole cloth recreate someone’s picture. By the time this article comes out, there is going to be text-to-video, and the question of did my art get stuck into a dataset of billions of images is meaningless when the output is animation that is unique and moving in a totally different way than a single image. I agree with all of the problems with single images and the value of labor. But it’s momentary. We are moving towards a procedurally generated future where there is a whole other method of filmmaking coming.”

“I want concept artists to treat [AI] as a tool because it’s going to be more powerful in their hands than anybody else’s.”

—Bill Cusick, Creative Director, Stability AI